climpred.metrics._msess


climpred.metrics._msess(forecast: xarray.Dataset, verif: xarray.Dataset, dim: Optional[Union[str, List[str]]] = None, **metric_kwargs: Any) → xarray.Dataset

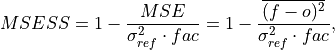

Mean Squared Error Skill Score (MSESS).

where

is 1 when using comparisons involving the ensemble mean (

is 1 when using comparisons involving the ensemble mean (m2e,e2c,e2o) and 2 when using comparisons involving individual ensemble members (m2c,m2m,m2o). See_get_norm_factor().This skill score can be intepreted as a percentage improvement in accuracy. I.e., it can be multiplied by 100%.
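The definition of MSESS can be checked numerically with a few lines of NumPy. This is a minimal sketch, not climpred's implementation: `msess_sketch` is a hypothetical helper, it assumes two 1-D arrays already aligned on the same dates, and it uses the population variance (ddof=0) of the verification data as $\sigma^{2}_{o}$.

```python
import numpy as np


def msess_sketch(forecast, verif, fac=1.0):
    """Return 1 - MSE / (fac * var(verif)) for aligned 1-D arrays.

    Hypothetical helper: assumes both inputs cover the same dates and uses the
    population variance (ddof=0) of the verification data as sigma_o^2.
    """
    forecast = np.asarray(forecast, dtype=float)
    verif = np.asarray(verif, dtype=float)
    mse = np.mean((forecast - verif) ** 2)  # mean squared error of the forecast
    clim_var = np.var(verif)  # single climatological variance (ddof=0)
    return float(1.0 - mse / (fac * clim_var))


obs = np.array([1.0, 2.0, 3.0, 4.0])
print(msess_sketch(obs, obs))  # perfect forecast -> 1.0
print(msess_sketch(np.full(4, obs.mean()), obs))  # climatology -> 0.0
print(msess_sketch(obs[::-1], obs))  # worse than climatology -> -3.0
```

For member-based comparisons (m2c, m2m, m2o) one would pass `fac=2`, which doubles the reference error the forecast is compared against.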

Note

climpred uses a single-valued internal reference forecast for the MSSS, in the terminology of Murphy [1988]. That is, the MSE is normalized by a single climatological variance of the verification data computed over the experimental window.

Parameters

forecast – Forecast.

verif – Verification data.

dim – Dimension(s) to perform metric over.

comparison – Name of the comparison, needed for the normalization factor fac; see _get_norm_factor(). (Handled internally by the compute functions.)

metric_kwargs – See xskillscore.mse().
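The normalization rule (fac = 1 for ensemble-mean comparisons, fac = 2 for member comparisons) can be sketched as a lookup. `norm_factor` and `pop_norm_factor` are hypothetical helpers written for illustration, not climpred's `_get_norm_factor()`; the assumption that `comparison` is removed from `metric_kwargs` before the remaining keywords are forwarded to an MSE function is likewise ours.

```python
# Hypothetical stand-ins; the mapping follows the documented rule only.
ENSEMBLE_MEAN_COMPARISONS = {"m2e", "e2c", "e2o"}
MEMBER_COMPARISONS = {"m2c", "m2m", "m2o"}


def norm_factor(comparison: str) -> int:
    """Return fac: 1 for ensemble-mean comparisons, 2 for member comparisons."""
    if comparison in ENSEMBLE_MEAN_COMPARISONS:
        return 1
    if comparison in MEMBER_COMPARISONS:
        return 2
    raise ValueError(f"unknown comparison: {comparison!r}")


def pop_norm_factor(metric_kwargs: dict) -> int:
    """Remove 'comparison' so the remaining kwargs could go to an MSE function."""
    comparison = metric_kwargs.pop("comparison")
    return norm_factor(comparison)


kwargs = {"comparison": "m2o", "skipna": True}
fac = pop_norm_factor(kwargs)
print(fac, kwargs)  # 2 {'skipna': True}
```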

Notes

| Property | Value |
|---|---|
| minimum | -∞ |
| maximum | 1.0 |
| perfect | 1.0 |
| orientation | positive |
| better than climatology | > 0.0 |
| equal to climatology | 0.0 |
| worse than climatology | < 0.0 |
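The bounds and thresholds listed in the Notes translate directly into a small classifier. `describe_msess` and `as_percent` are hypothetical helper names; the score 0.8268 is the lead-1 SST value shown in the Example.

```python
import math


def describe_msess(score: float) -> str:
    """Classify an MSESS value relative to climatology (hypothetical helper)."""
    if math.isnan(score):
        raise ValueError("MSESS is NaN")
    if score > 1.0:
        raise ValueError("MSESS cannot exceed its perfect score of 1.0")
    if score > 0.0:
        return "better than climatology"
    if score < 0.0:
        return "worse than climatology"
    return "equal to climatology"


def as_percent(score: float) -> float:
    """Express MSESS as a percentage improvement over climatology."""
    return 100.0 * score


print(describe_msess(0.8268), f"{as_percent(0.8268):.2f}%")
# better than climatology 82.68%
print(describe_msess(-0.5))  # worse than climatology
```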

References

Example

```python
>>> HindcastEnsemble.verify(
...     metric="msess", comparison="e2o", alignment="same_verifs", dim="init"
... )
<xarray.Dataset>
Dimensions:  (lead: 10)
Coordinates:
  * lead     (lead) int32 1 2 3 4 5 6 7 8 9 10
    skill    <U11 'initialized'
Data variables:
    SST      (lead) float64 0.8268 0.8175 0.783 0.7691 ... 0.4753 0.3251 0.2268
Attributes:
    prediction_skill_software:     climpred https://climpred.readthedocs.io/
    skill_calculated_by_function:  HindcastEnsemble.verify()
    number_of_initializations:     64
    number_of_members:             10
    alignment:                     same_verifs
    metric:                        msess
    comparison:                    e2o
    dim:                           init
    reference:                     []
```
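To see how a single-valued reference behaves across leads, here is a synthetic NumPy sketch. Assumptions: every lead is verified against the same set of dates (loosely mirroring `alignment="same_verifs"`), the forecasts are ensemble means (so `fac = 1`, as for `e2o`), and all values are random numbers rather than the SST data from the Example. Because the denominator $\mathrm{fac} \cdot \sigma^{2}_{o}$ is identical for every lead, differences in MSESS across leads come only from the MSE.

```python
import numpy as np

rng = np.random.default_rng(42)
n_verif, n_lead = 64, 10

# Hypothetical verification series on one common set of dates
obs = rng.normal(size=n_verif)

# Hypothetical ensemble-mean forecasts whose error grows with lead
error_scale = np.linspace(0.3, 1.2, n_lead)[:, np.newaxis]  # shape (lead, 1)
forecast = obs[np.newaxis, :] + error_scale * rng.normal(size=(n_lead, n_verif))

clim_var = np.var(obs)  # one climatological variance for the whole window
fac = 1  # ensemble-mean comparison (e.g. e2o)

mse = np.mean((forecast - obs) ** 2, axis=1)  # MSE per lead, averaged over dates
msess = 1.0 - mse / (fac * clim_var)
print(np.round(msess, 3))
```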